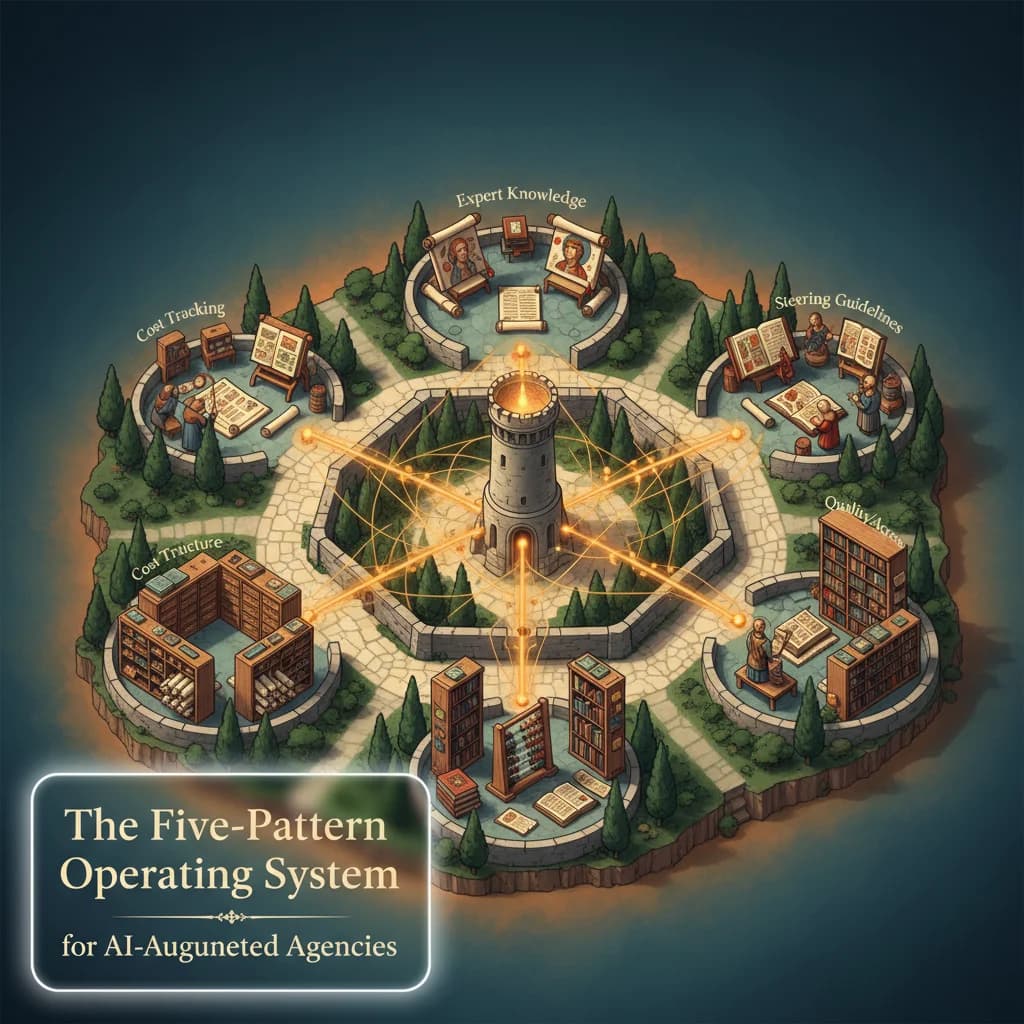

The Five-Pattern Operating System for AI-Augmented Agencies

How one operator with AI can outperform entire content teams. The five interlocking patterns behind Typescape's multi-client delivery system.


We run three clients with one operator.

Not three small clients. Three clients that would normally require dedicated account managers, content strategists, SEO specialists, and community managers. The kind of scope that breaks traditional agencies.

This isn't a productivity hack or a tool recommendation. It's an architectural claim about what's possible when you design AI workflows correctly.

Here's what we've learned: the difference between AI that helps and AI that produces slop isn't the model. It's the system around it.

The Thesis

One operator with AI can outperform entire content teams.

This sounds like marketing copy. It's not. It's the result of eighteen months building delivery systems for trust-first brands in healthcare, legal tech, and professional services.

The insight: most AI workflows fail because they're missing context. Hold the model constant and keep the prompts similar, and approval rates can still land 25 points apart. Why?

Because the agent running on a minimal prompt has no access to:

- Prior approved work

- Brand voice guidelines

- Steering rules from past rejections

- Research context

- Institutional knowledge

It's blind. So it produces generic output.

The five-pattern system solves this by making everything the agent needs accessible at runtime.

The Five Patterns

| Pattern | Core Insight |
|---|---|
| Expert Knowledge Extraction | Your best people can't document what they know until asked |
| Steering Guidelines | Look for the principle, not the fix |
| Quality Gap | The difference is access, not the model |
| Client Delivery | The folder structure IS the methodology |
| Cost Tracking | One tag prefix = complete attribution |

These patterns aren't independent. They form a reinforcing loop.

Pattern 1: Expert Knowledge Extraction

Your experts know things they can't write down. Not because they're hoarding knowledge, but because expertise lives in "except when" patterns that only surface when challenged.

A doctor doesn't think "I apply differential diagnosis." They just see a patient and know what questions to ask. That knowledge is invisible until you interview them the right way.

We built a multi-agent interview system that does exactly this. The interviewer agent identifies knowledge gaps. The questioner pushes for edge cases. The synthesizer extracts principles.

The output is an "insights codex"—a document of institutional wisdom that becomes prompt content.
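Below is a minimal sketch of that three-role loop in Python. It assumes each agent is just a function from prompt text to reply text; in practice, each would be a wrapped LLM call. `run_interview`, `Codex`, and the prompt wording are hypothetical illustrations, not Typescape's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical: an "agent" is any function from prompt text to reply text.
# In a real system each would wrap an LLM call with its own role prompt.
Agent = Callable[[str], str]


@dataclass
class Codex:
    """Insights codex: principles extracted from expert interviews."""
    topic: str
    principles: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [f"# Insights codex: {self.topic}"]
        lines += [f"- {p}" for p in self.principles]
        return "\n".join(lines)


def run_interview(
    topic: str,
    expert: Agent,
    interviewer: Agent,
    questioner: Agent,
    synthesizer: Agent,
    rounds: int = 3,
) -> Codex:
    codex = Codex(topic)
    for _ in range(rounds):
        # Interviewer: choose a gap, given what the codex already covers.
        question = interviewer(
            f"Topic: {topic}\nAlready captured: {codex.principles}\nAsk about a gap."
        )
        answer = expert(question)
        # Questioner: push for the "except when" case behind the answer.
        follow_up = questioner(f"Q: {question}\nA: {answer}\nAsk about an exception.")
        edge_answer = expert(follow_up)
        # Synthesizer: compress both exchanges into one reusable principle.
        transcript = f"Q: {question}\nA: {answer}\nQ: {follow_up}\nA: {edge_answer}"
        codex.principles.append(synthesizer(transcript + "\nState the principle."))
    return codex
```

The interviewer sees the principles already captured. Each round is therefore steered toward a new gap rather than repeating the last one.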

Example extraction:

Interview question: When do you deviate from the standard screening protocol?

Expert response: When the patient has a family history of early-onset disease, we skip directly to genetic counseling. The standard pathway misses these cases.

Extracted principle: Family history of early-onset disease triggers an alternate pathway that bypasses initial screening.

This principle becomes a guardrail in every draft about screening protocols.
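Here is one way to turn codex entries into per-draft guardrails. It assumes each extracted principle is tagged with the topics it governs; this tagging scheme is hypothetical, since the article doesn't specify how principles are matched to drafts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principle:
    text: str
    topics: frozenset[str]  # hypothetical: the topics this principle governs


def guardrails_for(draft_topics: set[str], codex: list[Principle]) -> list[str]:
    """Return every codex principle whose topics overlap the draft's topics."""
    return [p.text for p in codex if p.topics & draft_topics]


def build_prompt(task: str, draft_topics: set[str], codex: list[Principle]) -> str:
    rules = guardrails_for(draft_topics, codex)
    lines = [task, "", "Guardrails from the insights codex (do not violate):"]
    lines += [f"- {r}" for r in rules] or ["- (none apply)"]
    return "\n".join(lines)


codex = [
    Principle(
        "Family history of early-onset disease triggers an alternate pathway "
        "that bypasses initial screening.",
        frozenset({"screening", "genetics"}),
    ),
]
print(build_prompt("Draft a patient guide to screening.", {"screening"}, codex))
```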

Without extraction, prompts are generic. With extraction, prompts encode how your organization actually thinks.

Pattern 2: Steering Guidelines

Every draft gets feedback. Most teams treat feedback as a one-time fix: change this word, add this caveat, tone down that claim.

That's a mistake. Each piece of feedback contains a principle that applies to all future drafts.

Steering guidelines extract principles from feedback and make them available to agents at runtime.

The process:

- Draft gets rejected

- Identify the underlying principle, beyond the specific fix

- Add principle to steering guidelines

- Next draft reads guidelines before writing
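The four steps above can be sketched as two file operations. First, append the principle to the client's steering file. Second, read that file before every draft. The function names and the plain Markdown bullet format are assumptions for illustration.

```python
from datetime import date
from pathlib import Path


def add_guideline(path: Path, principle: str, feedback: str) -> None:
    """Record the principle behind a rejection, not just the one-off fix."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as f:
        f.write(f"- {principle}\n  (source: {date.today().isoformat()} feedback: {feedback})\n")


def load_guidelines(path: Path) -> str:
    """Read the steering file; empty before the first rejection."""
    return path.read_text(encoding="utf-8") if path.exists() else ""


def drafting_prompt(task: str, path: Path) -> str:
    """The next draft reads the accumulated guidelines before writing."""
    return f"{task}\n\nSteering guidelines (follow all):\n{load_guidelines(path)}"
```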

Example transformation:

Feedback: "Don't call it 'screening'—we use 'evaluation' for this procedure."

Wrong response: Change "screening" to "evaluation" in this draft.

Right response: Add to guidelines: "Use 'evaluation' not 'screening' for [procedure type]. Screening implies population-level; evaluation implies individual clinical judgment."

The second response prevents the same mistake across all future drafts. The first response fixes one document and leaves the knowledge trapped.
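Terminology principles like this one are concrete enough to check mechanically, not only to read. The sketch below is hypothetical and assumes rules are stored as avoid/prefer pairs. It ignores the "[procedure type]" scoping, which would need a richer rule format.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class TermRule:
    avoid: str
    prefer: str
    reason: str


def check_terms(draft: str, rules: list[TermRule]) -> list[str]:
    """Return one message per rule whose avoided term appears in the draft."""
    problems = []
    for rule in rules:
        # Whole-word, case-insensitive match ("Screening" counts; "prescreening" does not).
        hits = re.findall(rf"\b{re.escape(rule.avoid)}\b", draft, flags=re.IGNORECASE)
        if hits:
            problems.append(
                f"'{rule.avoid}' used {len(hits)}x: prefer '{rule.prefer}' ({rule.reason})"
            )
    return problems


RULES = [
    TermRule(
        avoid="screening",
        prefer="evaluation",
        reason="screening implies population-level; evaluation implies individual clinical judgment",
    ),
]
```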

Guidelines compound. After six months, a client's steering document contains 50+ principles that no new team member (human or AI) would discover on their own.

Pattern 3: The Quality Gap

We measured approval rates for the same task run three different ways:

| Approach | Approval Rate | What It Has |
|---|---|---|
| Simplified prompt | 60% | Basic instructions, no context |
| Full prompt (in-context) | 75% | Long prompt with guidelines embedded |
| Full prompt + filesystem | 85% | Reads files at runtime: prior work, guidelines, research |

Same model. Same task. 25-point gap.

The difference isn't prompt engineering tricks. It's access.

The 85% approach can:

- Read `content/published/*.md` to see prior approved work

- Reference `steering-guidelines.md` for known pitfalls

- Query the brand kit for voice and terminology

- Access research files for claims and citations
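The following sketches the context assembly behind the 85% approach, assuming the `clients/{client}/` layout described under Pattern 4. It simplifies one thing: the context is gathered up front and prepended to the prompt. The article instead describes agents reading these files themselves at runtime. `assemble_context` and its parameters are hypothetical.

```python
from pathlib import Path


def md_files(folder: Path) -> list[Path]:
    """Markdown files in a folder, or [] if the folder doesn't exist yet."""
    return sorted(folder.glob("*.md")) if folder.is_dir() else []


def assemble_context(root: Path, client: str, max_examples: int = 3) -> str:
    """Gather prior approved work, guidelines, brand kit, and research for one client."""
    base = root / "clients" / client
    sections = []
    # Prior approved work: the most recently modified pieces, as tone/format examples.
    published = sorted(
        md_files(base / "content" / "published"),
        key=lambda p: p.stat().st_mtime,
        reverse=True,
    )
    for p in published[:max_examples]:
        sections.append(f"## Approved example: {p.name}\n{p.read_text(encoding='utf-8')}")
    # Known pitfalls and voice rules.
    for label, name in [
        ("Steering guidelines", f"{client}-steering-guidelines.md"),
        ("Brand kit", f"{client}-brand-kit.md"),
    ]:
        path = base / name
        if path.exists():
            sections.append(f"## {label}\n{path.read_text(encoding='utf-8')}")
    # Research files: source material for claims and citations.
    for p in md_files(base / "content" / "research"):
        sections.append(f"## Research: {p.name}\n{p.read_text(encoding='utf-8')}")
    return "\n\n".join(sections)
```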

The 60% approach is working blind.

Most discussions about AI quality focus on prompts. The real lever is filesystem access. Give your agents the same context a human expert would have.

Pattern 4: Client Delivery Structure

Every client has the same folder structure:

    clients/{client}/
    ├── content/
    │   ├── drafts/
    │   ├── published/
    │   └── research/
    ├── dashboard/
    │   └── weeks/
    ├── {client}-brand-kit.md
    ├── {client}-steering-guidelines.md
    └── {client}-insights-codex.md

This seems administrative. It's not. The structure is the methodology.

When an agent needs to reference prior work, it knows where to look. When a human reviews a draft, they know where to find the brand kit. When we onboard a new client, the structure tells us exactly what to populate.
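Onboarding can be scripted so every client gets the identical layout. This is a minimal sketch; the placeholder file contents and the function name are assumptions.

```python
from pathlib import Path

# The standard layout from the tree above, relative to clients/{client}/.
DIRS = ["content/drafts", "content/published", "content/research", "dashboard/weeks"]
FILES = [
    "{client}-brand-kit.md",
    "{client}-steering-guidelines.md",
    "{client}-insights-codex.md",
]


def scaffold_client(root: Path, client: str) -> list[Path]:
    """Create any missing parts of clients/{client}/; return what was created."""
    base = root / "clients" / client
    created = []
    for rel in DIRS:
        path = base / rel
        if not path.is_dir():
            path.mkdir(parents=True)
            created.append(path)
    for pattern in FILES:
        path = base / pattern.format(client=client)
        if not path.exists():
            # Placeholder heading; the real content is populated during onboarding.
            path.write_text(f"# {path.stem}\n", encoding="utf-8")
            created.append(path)
    return created
```

Re-running it is safe because existing files are never overwritten. The returned list shows which pieces an older client folder was missing.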

The folder structure enables the quality gap solution. Without predictable paths, agents can't access context. Without context, approval rates drop to 60%.

What each location provides:

- `content/published/`: Examples of approved work (for tone and format)

- `content/research/`: Source material for claims

- `steering-guidelines.md`: Accumulated learnings from feedback

- `brand-kit.md`: Voice, terminology, positioning

- `insights-codex.md`: Expert knowledge from interviews

- `dashboard/weeks/`: Transparency artifacts for client trust

The structure is documentation. The documentation is process. The process is quality.

Pattern 5: Cost Tracking

LLM costs are real. Without attribution, you can't prove ROI. Without ROI, you can't justify investment in better prompts.

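One tag prefix = complete attribution. Every LLM call made for a client carries that client's prefix, so all spend traces back to the client it served.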

At week's end, we compute:

- LLM cost per client

- Approval rate by client

- Cost per approved draft
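Here is a sketch of the weekly roll-up. It assumes every LLM call is logged with a tag whose prefix before the first colon names the client (for example `acme:blog-draft`; the exact tag format is hypothetical). It also assumes each draft's approval outcome is recorded. Where the call log comes from (a provider's usage export, a gateway, or your own wrapper) is left open.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LLMCall:
    tag: str  # hypothetical format "<client>:<task>", e.g. "acme:blog-draft"
    cost_usd: float


@dataclass
class Draft:
    client: str
    approved: bool


def client_of(tag: str) -> str:
    """The prefix before the first colon is the client; that one tag is all attribution needs."""
    return tag.split(":", 1)[0]


def weekly_report(calls: list[LLMCall], drafts: list[Draft]) -> dict[str, dict[str, float]]:
    cost: dict[str, float] = defaultdict(float)
    for call in calls:
        cost[client_of(call.tag)] += call.cost_usd
    total: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for draft in drafts:
        total[draft.client] += 1
        approved[draft.client] += int(draft.approved)
    report = {}
    for client in sorted(set(cost) | set(total)):
        n_ok = approved[client]
        report[client] = {
            "llm_cost": round(cost[client], 2),
            "approval_rate": n_ok / total[client] if total[client] else 0.0,
            # No approved drafts means the cost per approval is undefined (infinite).
            "cost_per_approved": round(cost[client] / n_ok, 2) if n_ok else float("inf"),
        }
    return report
```

Dividing by approved drafts rather than all drafts is what surfaces the cost of rejections. A cheaper prompt that gets rejected more often can still cost more per usable piece.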

The math that matters:

| Approach | LLM Cost | Revision Time | True Cost per Draft |
|---|---|---|---|
| Simplified prompt | $2.00 | 15 min rework | $12.50 |
| Full prompt | $3.50 | 5 min rework | $8.50 |

The full prompt costs more tokens. But tokens are cheap. Revision time is expensive.

COGS (cost of goods sold) tracking reveals this. Intuition hides it.

Without cost visibility, the 85% approval approach looks expensive. With cost visibility, it's the obvious choice.

How the Patterns Reinforce Each Other

These five patterns form a loop:

    Expert Extraction → Rich Prompts → High Approval (85%)
        ↓
    Rejections ← ─ ─ ─ (15%)
        ↓
    Steering Guidelines (capture principles)
        ↓
    Folder Structure (makes guidelines accessible)
        ↓
    Cost Tracking (justifies continued investment)
        ↓
    → Expert Extraction (next round of interviews)

Each pattern depends on the others:

- Extraction surfaces knowledge that becomes prompt content

- Steering guidelines capture learnings from the 15% that fails

- Folder structure makes both accessible to agents

- Cost tracking proves the investment is worth it

- The cycle repeats, with richer prompts each round

Skip one pattern and the system degrades.

What Breaks Without Each Pattern

Without Expert Extraction

Prompts encode general knowledge, not institutional wisdom. Output sounds like every other AI-generated content.

Symptom: "This could be from any agency."

Without Steering Guidelines

Same mistakes repeat. Every new team member (human or AI) rediscovers the same pitfalls.

Symptom: "We gave this feedback three times already."

Without Full Prompts

The quality gap appears. Approval rates drop from 85% to 60%. Revision cycles multiply.

Symptom: "The automated stuff is always worse than manual."

Without Folder Structure

Agents can't find prior work. Research isn't accessible. Brand kits live in random docs.

Symptom: "Where did we put that?"

Without Cost Tracking

You can't prove ROI. Investment in better prompts feels like overhead. The loop breaks.

Symptom: "Is this actually worth it?"

The Stack View

The patterns form layers, from infrastructure to output:

| Layer | Contains | Depends On |
|---|---|---|
| 5. Output | Dashboards, published content, social posts | All below |
| 4. Quality | Steering guidelines, approval flows | Layer 3 |
| 3. Execution | Full prompts, filesystem access, research | Layers 1-2 |
| 2. Knowledge | Expert extraction, brand kit, prior work | Layer 1 |
| 1. Infrastructure | Folder structure, tagging, COGS tracking | Nothing |

Start at Layer 1. Each layer enables the layers above it.
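The "Depends On" column can be treated as a dependency graph, which makes the build order checkable rather than remembered. Below is a small sketch using Python's standard-library `graphlib` (Python 3.9+). The dictionary simply restates the table.

```python
from graphlib import TopologicalSorter

# The stack table's "Depends On" column: layer -> layers it builds on.
STACK = {
    "Output": {"Infrastructure", "Knowledge", "Execution", "Quality"},
    "Quality": {"Execution"},
    "Execution": {"Infrastructure", "Knowledge"},
    "Knowledge": {"Infrastructure"},
    "Infrastructure": set(),
}


def build_order(stack: dict[str, set[str]]) -> list[str]:
    """Order layers so each follows everything it depends on.

    Raises graphlib.CycleError if the dependencies form a loop,
    for example if Infrastructure were made to depend on Output.
    """
    return list(TopologicalSorter(stack).static_order())


print(build_order(STACK))
# ['Infrastructure', 'Knowledge', 'Execution', 'Quality', 'Output']
```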

Implementation Order

For someone building this system from scratch:

Weeks 1-2: Infrastructure

- Create the `clients/{client}/` folder hierarchy

- Establish tag prefixes for cost attribution

- Set up COGS config for shared cost tracking

- Draft initial brand kit

Weeks 3-4: Execution

- Port simplified prompts to full versions with file reads

- Enable agents to read prior work and guidelines

- Add research and corpus access

Weeks 5-6: Quality

- Create steering guidelines from first feedback cycle

- Establish approval flows (Slack, email, or PR review)

- Start logging rejection reasons

Week 7+: Optimization

- Automate weekly dashboard generation

- Conduct first expert interview for insights codex

- Track improvement metrics over time

The Moat

This system is hard to replicate because:

- Knowledge compounds. Steering guidelines get richer with every draft.

- Expertise is embedded. Expert extraction captures what competitors can't copy.

- Infrastructure integrates. Folder structure, tagging, and COGS work as one system.

- ROI is measurable. Cost tracking proves each investment.

A competitor can copy any one pattern. They can't copy the flywheel.

What This Means for Expert Brands

If you're a trust-first brand with deep expertise but no time to create content, this system is what we build for you.

Not generic content. Not AI slop. A delivery system you own, calibrated to your experts' knowledge, that gets better every week.

The patterns work for agencies. They also work inside companies with internal content teams.

The question is whether you'll build it yourself or work with someone who already has.

Next Steps

We're publishing the individual pattern deep-dives over the coming weeks:

- Expert Knowledge Extraction: How to interview SMEs

- Steering Guidelines: The living document that improves every draft

- The Quality Gap: Why 85% beats 60%

- Client Delivery Structure: The folder structure methodology

- Cost Tracking: One tag = complete attribution

Or, if you want to see where you're invisible to AI and whether this system fits your brand: Get an AI visibility audit.